India’s digital ecosystem is preparing for another major regulatory shift with the release of the Draft IT (Digital Code) Rules 2026. As online platforms become central to communication, entertainment, education, and commerce, concerns around harmful content, platform accountability, and the safety of young users have grown sharper. The proposed rules seek to modernise India’s internet governance framework by tightening oversight of online content while placing a strong emphasis on child and youth protection in the digital space.

Why New Digital Rules Were Needed

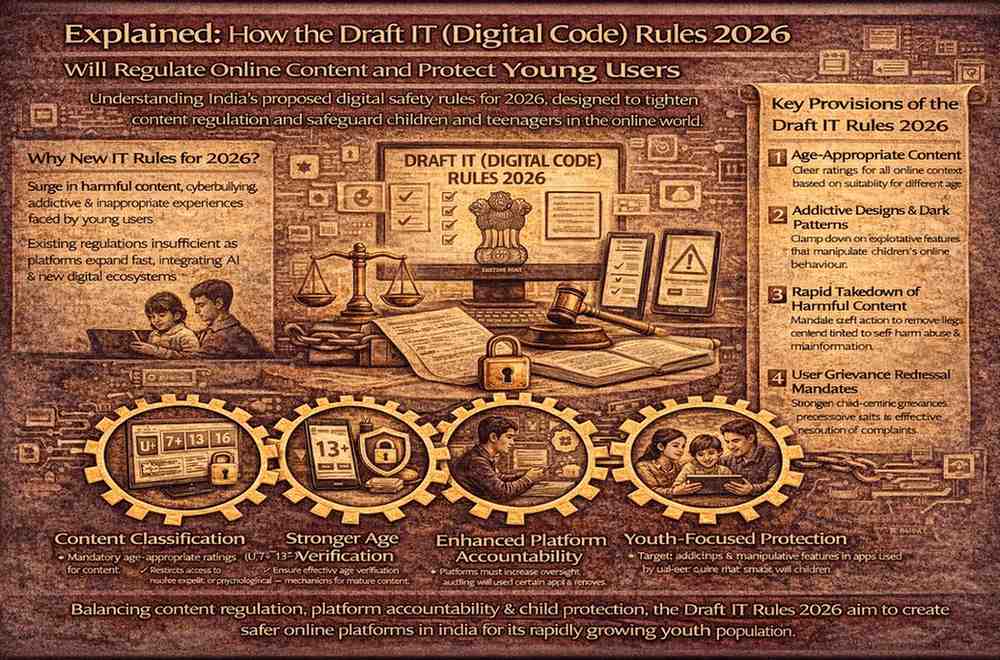

The scale and speed of online content creation in India have far outpaced existing regulatory mechanisms. Social media platforms, video-sharing apps, gaming ecosystems, and AI-driven content tools now reach hundreds of millions of users daily, including children and teenagers. While this expansion has enabled creativity and access, it has also amplified risks such as harmful content exposure, misinformation, cyberbullying, and addictive digital behaviours.

The Draft IT (Digital Code) Rules 2026 are intended to address these challenges by updating obligations for platforms and clarifying the rights and protections available to users. The rules reflect the government’s view that self-regulation alone is no longer sufficient in a highly algorithm-driven content economy.

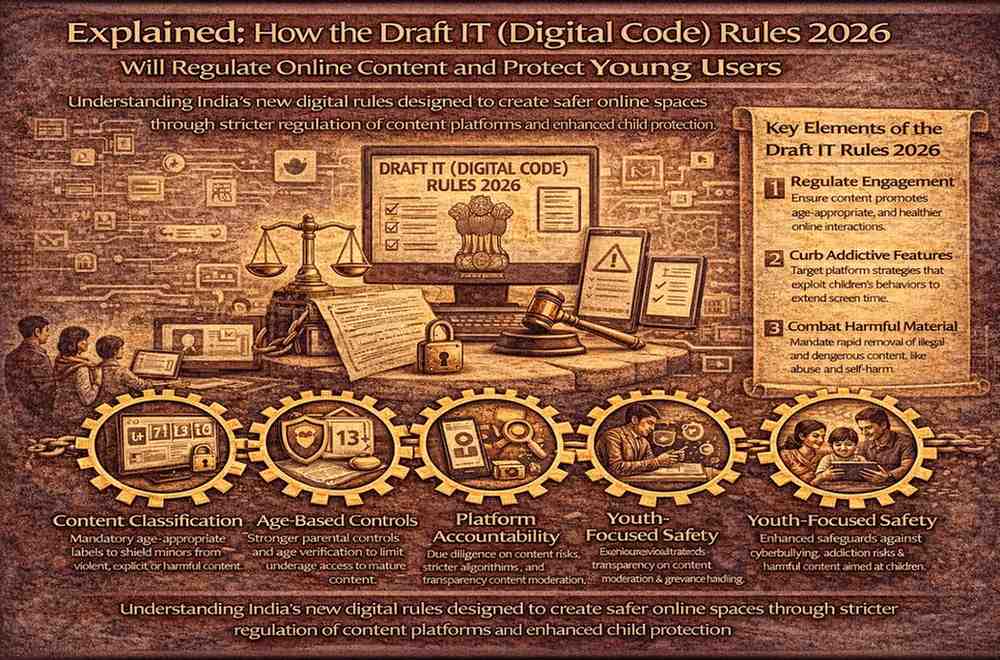

What the Draft IT (Digital Code) Rules 2026 Cover

The proposed digital code expands the scope of regulation beyond traditional social media to include content intermediaries, video platforms, online gaming services, and emerging AI-powered content systems. It introduces a more comprehensive framework for how platforms host, recommend, moderate, and remove content.

The rules also seek to harmonise content governance with broader digital safety goals. Instead of focusing only on takedowns, the framework emphasises prevention, accountability, and user empowerment, particularly for minors and vulnerable users.

Stronger Content Classification and Age-Based Controls

A key feature of the draft rules is mandatory content classification. Platforms will be required to label content based on age-appropriateness and sensitivity, allowing users and parents to make informed choices. This move is designed to reduce accidental exposure of minors to violent, explicit, or psychologically harmful material.

Age-based access controls are expected to become more robust, with platforms responsible for implementing reasonable age verification and parental control tools. While exact technical standards may evolve, the intent is to ensure that content consumption aligns more closely with a user’s age and maturity level.
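To make the idea concrete, the following is a minimal sketch of how a platform might combine content labels, a verified age, and a parental cap. The rating tiers, age thresholds, and all names (`MIN_AGE_BY_RATING`, `Viewer`, `can_view`) are hypothetical illustrations; the draft rules as described here do not prescribe specific labels or technical standards.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical rating tiers and minimum ages (assumptions for illustration;
# not taken from the draft rules).
MIN_AGE_BY_RATING = {"ALL": 0, "13+": 13, "16+": 16, "18+": 18}


@dataclass
class Viewer:
    # None means age verification has not been completed.
    verified_age: Optional[int]
    # Optional cap chosen by a parent, e.g. "13+"; must be a known rating.
    parental_max_rating: Optional[str] = None


def can_view(viewer: Viewer, rating: str) -> bool:
    """Return True if the viewer may see content carrying the given rating."""
    # Unlabelled or unknown content is treated as the most restrictive tier.
    rating_min = MIN_AGE_BY_RATING.get(rating, max(MIN_AGE_BY_RATING.values()))

    # Safe default: an unverified viewer only gets all-ages content.
    age = viewer.verified_age if viewer.verified_age is not None else 0
    if age < rating_min:
        return False

    # A parental cap can only narrow access, never widen it.
    if viewer.parental_max_rating is not None:
        cap_min = MIN_AGE_BY_RATING[viewer.parental_max_rating]
        if rating_min > cap_min:
            return False

    return True


teen = Viewer(verified_age=15, parental_max_rating="13+")
print(can_view(teen, "13+"))  # allowed by both age and parental cap
print(can_view(teen, "18+"))  # blocked by age
```

The design choice worth noting is the default: in this sketch, unverified users and unlabelled content both fall back to the most cautious setting, which mirrors the stated intent of reducing accidental exposure.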

Platform Accountability and Due Diligence

Under the draft rules, online platforms face expanded due diligence obligations. They are expected to actively monitor content risks, respond promptly to user complaints, and maintain transparent content moderation processes. The emphasis is on proactive responsibility rather than reactive compliance.

Platforms will also need to clearly disclose how their algorithms recommend content, especially when such systems influence what young users see most frequently. This is aimed at addressing concerns around algorithmic amplification of harmful or addictive content.

Special Safeguards for Children and Teenagers

Protecting young users sits at the centre of the Draft IT (Digital Code) Rules 2026. Platforms are expected to design child-friendly interfaces, limit intrusive data collection, and restrict targeted advertising aimed at minors.

There is also a focus on mental well-being. Features that encourage excessive screen time or exploit behavioural vulnerabilities among children may face stricter scrutiny. By shifting responsibility onto platforms, the rules aim to create safer digital environments without placing the entire burden on parents or schools.

Tighter Rules Around Harmful and Illegal Content

The draft code strengthens requirements for dealing with illegal and harmful content, including material related to abuse, exploitation, self-harm, and severe misinformation. Platforms must act swiftly once such content is identified, whether it is flagged by automated systems or reported by users.

Importantly, the framework attempts to balance regulation with freedom of expression. The intent is not blanket censorship but structured oversight, ensuring that harmful content is addressed while legitimate speech is protected through clear procedures and appeal mechanisms.

Transparency, Reporting, and User Grievance Redressal

Transparency is a cornerstone of the proposed rules. Platforms will be required to publish regular reports detailing content moderation actions, complaints received, and steps taken to protect users. This public reporting is expected to improve accountability and enable informed policy discussions.

User grievance redressal mechanisms are also being strengthened. Clear timelines for complaint resolution and escalation pathways are intended to ensure that users, including parents acting on behalf of minors, are not left without recourse when issues arise.

Role of the Government and Regulators

The implementation and oversight of the Draft IT (Digital Code) Rules 2026 will involve coordination across government agencies, with policy leadership expected from the Ministry of Electronics and Information Technology. The rules propose structured engagement between regulators and platforms, including compliance audits and consultation mechanisms.

Rather than micromanaging content, the government’s role is positioned as setting standards, monitoring systemic risks, and intervening where platforms consistently fail to meet their obligations.

Concerns and Debates Around the Draft Rules

As with any major digital regulation, the draft rules have sparked debate. Technology companies have raised concerns about compliance costs, the technical feasibility of age verification, and the potential impact on innovation. Civil society groups, meanwhile, stress the need to ensure that safeguards against harmful content do not become tools for overreach or suppression of dissent.

The final shape of the rules is likely to evolve through stakeholder consultations. How effectively the balance between safety, privacy, and free expression is maintained will determine public trust in the framework.

What This Means for Platforms and Creators

For online platforms and content creators, the Draft IT (Digital Code) Rules 2026 signal a shift toward greater responsibility and stricter compliance. Content strategies, recommendation systems, and audience engagement practices may need reassessment, particularly when minors form a significant part of the user base.

Creators may also need to become more mindful of content classification and disclosures, as platforms tighten enforcement to meet regulatory expectations.

What Parents and Young Users Should Know

For parents and young users, the draft rules promise stronger protections and clearer rights. Enhanced parental controls, safer defaults, and more responsive grievance systems could make digital spaces less overwhelming and more secure for children.

However, digital literacy will remain important. Regulations can reduce risk, but informed use and awareness will continue to play a critical role in online safety.

The Road Ahead

The Draft IT (Digital Code) Rules 2026 represent a significant step in India’s approach to internet governance. By focusing on platform accountability and youth protection, the framework acknowledges the realities of a digitally native generation growing up online.

Put simply, the rules aim to make the internet safer without stripping it of openness and creativity. Their ultimate impact will depend on thoughtful implementation, ongoing dialogue, and the willingness of platforms, regulators, and users to share responsibility for building a healthier digital ecosystem in India.


Last Updated on: Wednesday, January 28, 2026 11:16 am by Digital Herald Team | Published by: Digital Herald Team on Wednesday, January 28, 2026 11:16 am | News Categories: India